Alternating Current (AC), Voltage, Sinusoidal Waveform

Alternating current (AC), unlike direct current (DC), flows first in one direction and then in the opposite direction. The amperage of an alternating current is a function of time, whereas the amperage of a direct current is constant.

The most common AC waveform is a sine (or sinusoidal) waveform. See figure 1.

Figure 1.

Instantaneous Current and Voltage

$$I = I_p \sin(2\pi f t)$$

where I = instantaneous current in amperes

Ip = the maximum, or peak, current in amperes

f = frequency, the number of cycles completed each second, in hertz (Hz)

t = time in seconds

Other notation

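$$I = I_p \sin(\omega t), \qquad \omega = 2\pi f$$

where ω is the angular frequency in radians per second.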
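The instantaneous-current formula can be evaluated directly. The following is a minimal Python sketch; the function names and the 10 A, 50 Hz values are illustrative assumptions, used only to show that the f-form and the ω-form describe the same waveform.

```python
import math

def instantaneous_current(i_peak: float, freq_hz: float, t: float) -> float:
    """I = Ip * sin(2*pi*f*t), in amperes (t in seconds)."""
    return i_peak * math.sin(2 * math.pi * freq_hz * t)

def instantaneous_current_omega(i_peak: float, omega: float, t: float) -> float:
    """Same waveform written with angular frequency: I = Ip * sin(omega*t)."""
    return i_peak * math.sin(omega * t)

# Illustrative values: a 10 A peak current at 50 Hz.
ip, f = 10.0, 50.0
omega = 2 * math.pi * f      # angular frequency in rad/s

# A quarter of a period (5 ms at 50 Hz) after t = 0 the current reaches its peak.
print(instantaneous_current(ip, f, 1 / (4 * f)))   # ≈ 10.0

# Both notations give the same instantaneous values.
for t in (0.0, 0.003, 0.0125):
    assert math.isclose(instantaneous_current(ip, f, t),
                        instantaneous_current_omega(ip, omega, t), abs_tol=1e-12)
```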

The circuits illustrating all the following electric circuit laws and circuit analysis techniques are included in the Circuit Magic installation. Circuit Magic is symbolic electrical circuit simulation software for analyzing circuits using Kirchhoff's laws and other methods.

As mentioned in the previous chapter, an alternating current is a current that changes with time. One specific class of current (and of voltage) is the sinusoidal waveform. Sinusoidal waveforms are fundamental to radio: a Morse code signal, for example, is generated from a sinusoidal waveform, and sinusoidal waveforms are the basis of AM, FM, and television signals as well.

Basic Characteristics of Sinusoidal Waveforms

There are three basic characteristics of sinusoidal waveforms (hereafter sinusoids): amplitude, frequency, and phase.

As shown in the diagram, the amplitude is the peak value of the waveform measured from its mean value: half the difference between the high value and the low value (the full difference is the peak-to-peak value). The units of the amplitude depend upon what the waveform represents. If the waveform is a voltage as a function of time, the amplitude is in volts; if it is a current as a function of time, the amplitude is in amperes. The frequency is equal to $f = 1/T$, where T is the period, and is a measure of how quickly the waveform cycles. If the waveform is a function of time, frequency is usually measured in hertz (Hz). Because frequency measures how rapidly the waveform cycles, it is sometimes (usually in older texts) given in cycles per second; one cycle per second equals one hertz. The inverse of the frequency is called the period; it is usually written T, although some texts use λ. Phase is a measure of how "offset" the signal is from some reference signal. Phase only makes sense when comparing signals of the same frequency; otherwise the phase difference changes as a function of time.

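To make amplitude, period, frequency, and phase concrete, here is a minimal sketch that samples one period of an assumed 170 V peak, 60 Hz sinusoid (illustrative values; the helper `sinusoid` is hypothetical). It measures the amplitude as half the peak-to-peak value, checks that the waveform repeats after T = 1/f, and shows that a 90° phase offset between two signals of the same frequency amounts to a quarter-period time shift.

```python
import math

def sinusoid(amplitude: float, freq_hz: float, phase_rad: float):
    """Return v(t) = amplitude * sin(2*pi*f*t + phase)."""
    return lambda t: amplitude * math.sin(2 * math.pi * freq_hz * t + phase_rad)

f = 60.0           # frequency in Hz (illustrative)
T = 1 / f          # period in seconds
v = sinusoid(170.0, f, 0.0)

# Sample one full period densely and measure the extremes.
samples = [v(k * T / 1000) for k in range(1000)]
peak_to_peak = max(samples) - min(samples)
amplitude = peak_to_peak / 2     # amplitude = half the peak-to-peak value
print(round(amplitude, 3))       # 170.0

# The waveform repeats after one period T = 1/f.
assert math.isclose(v(0.001), v(0.001 + T), abs_tol=1e-9)

# Same frequency, phase offset of 90 degrees (pi/2 rad): a quarter-period time shift.
w = sinusoid(170.0, f, math.pi / 2)
assert math.isclose(w(0.002), v(0.002 + T / 4), abs_tol=1e-9)
```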

Phasor (sine waves)

From Wikipedia, the free encyclopedia

In physics and engineering, a phase vector ("phasor") is a representation of a sine wave whose amplitude (A), phase (θ), and frequency (ω) are time-invariant. It is a subset of a more general concept called analytic representation. Phasors reduce the dependencies on these parameters to three independent factors, thereby simplifying certain kinds of calculations. In particular the frequency factor, which also includes the time-dependence of the sine wave, is often common to all the components of a linear combination of sine waves. Using phasors, it can be factored out, leaving just the static amplitude and phase information to be combined algebraically (rather than trigonometrically). Similarly, linear differential equations can be reduced to algebraic ones. The term phasor therefore often refers to just those two factors. In older texts, a phasor is also referred to as a sinor.


Definition

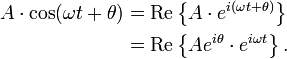

Euler's formula indicates that sine waves can be represented mathematically as the sum of two complex-valued functions:

$$A\cos(\omega t + \theta) = A\,\frac{e^{i(\omega t + \theta)} + e^{-i(\omega t + \theta)}}{2}$$

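or as the real part of one of the functions:

$$A\cos(\omega t + \theta) = \operatorname{Re}\left\{A e^{i(\omega t + \theta)}\right\} = \operatorname{Re}\left\{A e^{i\theta} e^{i\omega t}\right\}$$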

As indicated above, phasor can refer to either $A e^{i\theta} e^{i\omega t}$ or just the complex constant $A e^{i\theta}$. In the latter case, it is understood to be a shorthand notation, encoding the amplitude and phase of an underlying sinusoid.

An even more compact shorthand is angle notation:

$$A \angle \theta$$

The sine wave can be understood as the projection on the real axis of a rotating vector on the complex plane. The modulus of this vector is the amplitude of the oscillations, while its argument is the total phase ωt + θ. The phase constant θ represents the angle that the complex vector forms with the real axis at t = 0.
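The rotating-vector picture can be checked numerically: a phasor is just a complex number, and projecting the rotating vector onto the real axis recovers the sinusoid. A minimal Python sketch using the standard `cmath` module; the amplitude, phase, and frequency values and the helper names are illustrative assumptions.

```python
import cmath
import math

def phasor(amplitude: float, theta: float) -> complex:
    """Complex constant A e^{i theta}, encoding amplitude and phase."""
    return cmath.rect(amplitude, theta)

def to_time_domain(p: complex, omega: float, t: float) -> float:
    """Re{P e^{i omega t}}: project the rotating vector onto the real axis."""
    return (p * cmath.exp(1j * omega * t)).real

A, theta, omega = 2.0, math.pi / 6, 2 * math.pi * 50   # illustrative values
P = phasor(A, theta)

for t in (0.0, 0.001, 0.0037):
    direct = A * math.cos(omega * t + theta)
    via_phasor = to_time_domain(P, omega, t)
    assert math.isclose(direct, via_phasor, abs_tol=1e-12)

# Angle notation A∠θ: the modulus and argument of the phasor.
print(abs(P), math.degrees(cmath.phase(P)))   # ≈ 2.0 and ≈ 30.0
```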

Phasor arithmetic

Multiplication by a constant (scalar)

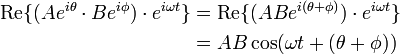

Multiplication of the phasor $A e^{i\theta} e^{i\omega t}$ by a complex constant $B e^{i\phi}$ produces another phasor. That means its only effect is to change the amplitude and phase of the underlying sinusoid:

$$\operatorname{Re}\left\{\left(A e^{i\theta} \cdot B e^{i\phi}\right) e^{i\omega t}\right\} = \operatorname{Re}\left\{\left(AB\, e^{i(\theta + \phi)}\right) e^{i\omega t}\right\} = AB\cos\left(\omega t + (\theta + \phi)\right)$$

In electronics, $B e^{i\phi}$ would represent an impedance, which is independent of time. In particular, it is not the shorthand notation for another phasor. Multiplying a phasor current by an impedance produces a phasor voltage. But the product of two phasors (or squaring a phasor) would represent the product of two sine waves, which is a nonlinear operation that produces new frequency components. Phasor notation can therefore only represent systems with one frequency, such as a linear system stimulated by a sinusoid.
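A minimal sketch of multiplication by a constant, assuming an illustrative series RL load so that the complex constant is an impedance Z = R + jωL (Python writes the imaginary unit as `j`, as electrical engineering texts do). Multiplying the current phasor A∠θ by Z = B∠φ should only scale the amplitude by B and shift the phase by φ, giving AB cos(ωt + θ + φ) in the time domain. All component values are assumptions for illustration.

```python
import cmath
import math

omega = 2 * math.pi * 60        # angular frequency in rad/s (illustrative)
R, L = 10.0, 0.05               # series RL load in ohms and henries (illustrative)
Z = complex(R, omega * L)       # impedance B e^{j phi}: a time-independent complex constant

A, theta = 2.0, math.radians(-15)   # current phasor A∠θ (illustrative)
I = cmath.rect(A, theta)
V = I * Z                       # phasor voltage = impedance × phasor current

B, phi = abs(Z), cmath.phase(Z)
for t in (0.0, 0.004, 0.011):
    time_domain = (V * cmath.exp(1j * omega * t)).real
    # AB cos(ωt + (θ + φ)): same frequency, new amplitude and phase
    expected = A * B * math.cos(omega * t + (theta + phi))
    assert math.isclose(time_domain, expected, rel_tol=1e-9, abs_tol=1e-9)

print(f"|V| = {abs(V):.2f} V at {math.degrees(cmath.phase(V)):.1f} degrees")
```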

Differentiation and integration

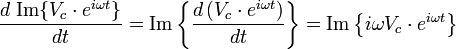

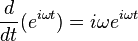

The time derivative or integral of a phasor produces another phasor[2]. For example:

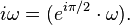

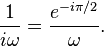
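The claim that differentiating or integrating a phasor yields another phasor can be checked numerically. In this minimal Python sketch (the frequency, phasor, sample time, and step size h are illustrative assumptions, and the helper names are hypothetical), a central finite difference of Re{P e^{iωt}} is compared with the signal whose phasor is iωP, and differentiating the signal whose phasor is P/(iω) recovers the original.

```python
import cmath
import math

def signal(p: complex, omega: float, t: float) -> float:
    """Real sinusoid represented by phasor p: Re{p e^{i omega t}}."""
    return (p * cmath.exp(1j * omega * t)).real

def central_difference(p: complex, omega: float, t: float, h: float = 1e-8) -> float:
    """Numerical time derivative of signal(p, omega, t)."""
    return (signal(p, omega, t + h) - signal(p, omega, t - h)) / (2 * h)

omega = 2 * math.pi * 1000.0    # rad/s (illustrative)
P = cmath.rect(3.0, 0.4)        # arbitrary phasor A e^{i theta}
t = 1.23e-4                     # arbitrary sample time in seconds

# Differentiation: multiply the phasor by i*omega.
deriv_numeric = central_difference(P, omega, t)
deriv_phasor = signal(1j * omega * P, omega, t)

# Integration: multiply the phasor by 1/(i*omega); differentiating that recovers the original.
integral_phasor = P / (1j * omega)
recovered = central_difference(integral_phasor, omega, t)

print(deriv_numeric, deriv_phasor)
print(recovered, signal(P, omega, t))
```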

Therefore, in phasor representation, the time derivative of a sinusoid becomes just multiplication by the constant,  Similarly, integrating a phasor corresponds to multiplication by

Similarly, integrating a phasor corresponds to multiplication by  The time-dependent factor,

The time-dependent factor,  , is unaffected. When we solve a linear differential equation with phasor arithmetic, we are merely factoring

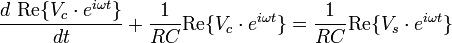

, is unaffected. When we solve a linear differential equation with phasor arithmetic, we are merely factoring  out of all terms of the equation, and reinserting it into the answer. For example, consider the following differential equation for the voltage across the capacitor in an RC circuit:

out of all terms of the equation, and reinserting it into the answer. For example, consider the following differential equation for the voltage across the capacitor in an RC circuit:

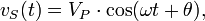

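When the voltage source in this circuit is sinusoidal:

$$v_S(t) = V_P \cos(\omega t + \theta)$$

we may substitute:

$$v_S(t) = \operatorname{Re}\left\{V_s\, e^{i\omega t}\right\}, \qquad v_C(t) = \operatorname{Re}\left\{V_c\, e^{i\omega t}\right\}$$

where phasor $V_s = V_P e^{i\theta}$, and phasor $V_c$ is the unknown quantity to be determined.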

In the phasor shorthand notation, the differential equation reduces to[3]:

$$i\omega V_c + \frac{1}{RC} V_c = \frac{1}{RC} V_s$$

Solving for the phasor capacitor voltage gives:

$$V_c = \frac{1}{1 + i\omega RC} \cdot V_s = \frac{1 - i\omega RC}{1 + (\omega RC)^2} \cdot V_P e^{i\theta}$$

As we have seen, the factor multiplying $V_s$ represents differences of the amplitude and phase of $v_C(t)$ relative to $V_P$ and $\theta$.

In polar coordinate form, it is:

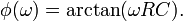

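where $\phi(\omega) = \arctan(\omega RC)$.

Therefore:

$$v_C(t) = \frac{1}{\sqrt{1 + (\omega RC)^2}} \cdot V_P \cos\left(\omega t + \theta - \phi(\omega)\right)$$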
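The RC result can be sanity-checked by substituting the closed-form steady-state solution back into the circuit equation at a few instants. This minimal Python sketch assumes the capacitor equation has the standard form dv_C/dt + v_C/(RC) = v_S/(RC), and uses illustrative component and source values; it checks both that the polar form matches Re{V_c e^{iωt}} and that the residual of the differential equation vanishes.

```python
import cmath
import math

R, C = 1e3, 1e-6               # 1 kΩ and 1 µF (illustrative), so RC = 1 ms
omega = 2 * math.pi * 200      # source angular frequency in rad/s
VP, theta = 5.0, 0.3           # source amplitude (V) and phase (rad)

Vs = cmath.rect(VP, theta)                 # source phasor V_P e^{i theta}
Vc = Vs / (1 + 1j * omega * R * C)         # phasor solution for the capacitor voltage

gain = 1 / math.sqrt(1 + (omega * R * C) ** 2)   # magnitude of the factor multiplying V_s
phi = math.atan(omega * R * C)                    # phi(omega) = arctan(omega R C)

def v_c(t: float) -> float:
    """Closed form: gain * V_P * cos(omega t + theta - phi)."""
    return gain * VP * math.cos(omega * t + theta - phi)

def residual(t: float) -> float:
    """dv_C/dt + v_C/(RC) - v_S/(RC); vanishes for the steady-state solution."""
    dvc_dt = -gain * VP * omega * math.sin(omega * t + theta - phi)
    v_s = VP * math.cos(omega * t + theta)
    return dvc_dt + v_c(t) / (R * C) - v_s / (R * C)

for t in (0.0, 0.0007, 0.0031):
    # The polar form agrees with Re{V_c e^{i omega t}} ...
    assert math.isclose(v_c(t), (Vc * cmath.exp(1j * omega * t)).real, abs_tol=1e-9)
    # ... and satisfies the differential equation.
    assert abs(residual(t)) < 1e-6
```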

Addition

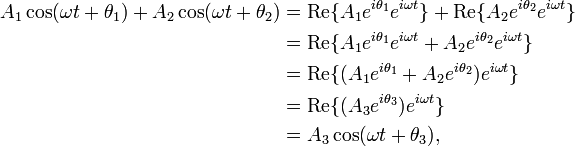

The sum of multiple phasors produces another phasor. That is because the sum of sine waves with the same frequency is also a sine wave with that frequency:

$$A_1\cos(\omega t + \theta_1) + A_2\cos(\omega t + \theta_2) = A_3\cos(\omega t + \theta_3)$$

where:

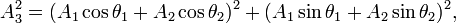

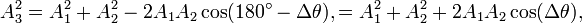

or, via the law of cosines on the complex plane (or the trigonometric identity for angle differences):

$$A_3^2 = A_1^2 + A_2^2 - 2A_1A_2\cos(180^\circ - \Delta\theta) = A_1^2 + A_2^2 + 2A_1A_2\cos(\Delta\theta)$$

where Δθ = θ1 − θ2. A key point is that A3 and θ3 do not depend on ω or t, which is what makes phasor notation possible. The time and frequency dependence can be suppressed and reinserted into the outcome as long as the only operations used in between are ones that produce another phasor. In angle notation, the operation shown above is written:

$$A_1 \angle \theta_1 + A_2 \angle \theta_2 = A_3 \angle \theta_3$$

Another way to view addition is that two vectors with coordinates [A1 cos(ωt+θ1), A1 sin(ωt+θ1)] and [A2 cos(ωt+θ2), A2 sin(ωt+θ2)] are added vectorially to produce a resultant vector with coordinates [A3 cos(ωt+θ3), A3 sin(ωt+θ3)]. (see animation)
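Phasor addition can be sketched in a few lines: add the static complex numbers, read off A3 and θ3, and then confirm both the law-of-cosines relation and the time-domain sum. This is a minimal Python sketch; the two amplitudes, phases, and the frequency are illustrative assumptions.

```python
import cmath
import math

omega = 2 * math.pi * 50                  # rad/s (illustrative)
A1, th1 = 3.0, math.radians(20)           # first sinusoid A1∠θ1 (illustrative)
A2, th2 = 4.0, math.radians(110)          # second sinusoid A2∠θ2 (illustrative)

# Add the static phasors; omega and t play no part in this step.
P3 = cmath.rect(A1, th1) + cmath.rect(A2, th2)
A3, th3 = abs(P3), cmath.phase(P3)

# Law-of-cosines form: A3^2 = A1^2 + A2^2 + 2*A1*A2*cos(delta_theta)
dtheta = th1 - th2
assert math.isclose(A3**2, A1**2 + A2**2 + 2 * A1 * A2 * math.cos(dtheta))

# Reinsert the time dependence: the sum of the two sinusoids is A3 cos(omega t + theta3).
for t in (0.0, 0.0013, 0.0071):
    lhs = A1 * math.cos(omega * t + th1) + A2 * math.cos(omega * t + th2)
    assert math.isclose(lhs, A3 * math.cos(omega * t + th3), abs_tol=1e-12)

print(round(A3, 6), round(math.degrees(th3), 2))
```

Because the two phasors here happen to be 90° apart, the result reduces to a 3-4-5 right triangle, which makes the output easy to check by hand.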

In physics, this sort of addition occurs when sine waves "interfere" with each other, constructively or destructively. The static vector concept provides useful insight into questions like this: "What phase difference would be required between three identical waves for perfect cancellation?" In this case, simply imagine taking three vectors of equal length and placing them head to tail such that the last head matches up with the first tail. Clearly, the shape that satisfies these conditions is an equilateral triangle, so the angle from each phasor to the next is 120° (2π/3 radians), or one third of a wavelength, λ/3. So the phase difference between each wave must also be 120°, as is the case in three-phase power.

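In other words, what this shows is:

$$\cos(\omega t) + \cos\left(\omega t + \frac{2\pi}{3}\right) + \cos\left(\omega t - \frac{2\pi}{3}\right) = 0$$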

In the example of three waves, the phase difference between the first and the last wave was 240 degrees, while for two waves destructive interference happens at 180 degrees. In the limit of many waves, the phasors must form a circle for destructive interference, so that the first phasor is nearly parallel with the last. This means that for many sources, destructive interference happens when the first and last waves differ by 360 degrees, a full wavelength λ. This is why, in single-slit diffraction, the minima occur when light from the far edge travels a full wavelength further than the light from the near edge.
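The cancellation argument can be checked by summing equally spaced unit phasors. In this minimal Python sketch (the helper `phasor_sum` is hypothetical and the values are illustrative), n phasors each rotated 2π/n from the previous one close a polygon and sum to zero: n = 3 is the three-phase case, n = 2 is the 180° case, and large n approaches the circle described above, where the first-to-last phase difference 2π(n−1)/n approaches 360°.

```python
import cmath
import math

def phasor_sum(n: int, step: float) -> complex:
    """Sum of n unit phasors, each rotated `step` radians from the previous one."""
    return sum(cmath.exp(1j * k * step) for k in range(n))

three = phasor_sum(3, 2 * math.pi / 3)          # three identical waves 120° apart
two = phasor_sum(2, math.pi)                    # two waves 180° apart
many = phasor_sum(1000, 2 * math.pi / 1000)     # many waves spread over nearly 360°
partial = phasor_sum(3, math.pi / 2)            # a step that does not close the polygon

print(abs(three), abs(two), abs(many), abs(partial))

# Time-domain form of the three-phase identity at an arbitrary instant.
omega, t = 2 * math.pi * 50, 0.0042
total = (math.cos(omega * t) + math.cos(omega * t + 2 * math.pi / 3)
         + math.cos(omega * t - 2 * math.pi / 3))
print(total)
```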

Phasor diagrams

Electrical engineers, electronics engineers, electronic engineering technicians and aircraft engineers all use phasor diagrams to visualize complex constants and variables (phasors). Like vectors, arrows drawn on graph paper or computer displays represent phasors. Cartesian and polar representations each have advantages.

Circuit laws

With phasors, the techniques for solving DC circuits can be applied to solve AC circuits. A list of the basic laws is given below.

- Ohm's law for resistors: a resistor has no time delays and therefore doesn't change the phase of a signal, so V = IR remains valid.

- Ohm's law for resistors, inductors, and capacitors: V = IZ, where Z is the complex impedance.

- In an AC circuit we have real power (P), which represents the average power into the circuit, and reactive power (Q), which indicates power flowing back and forth. We can also define the complex power S = P + jQ and the apparent power, which is the magnitude of S. The power law for an AC circuit expressed in RMS phasors is then S = VI* (where I* is the complex conjugate of I).

- Kirchhoff's circuit laws work with phasors in complex form.

Given this, we can apply the techniques of resistive circuit analysis with phasors to analyze single-frequency AC circuits containing resistors, capacitors, and inductors. Linear AC circuits with multiple frequencies, and AC circuits with different waveforms, can be analyzed to find voltages and currents by transforming all waveforms to sine-wave components with magnitude and phase, then analyzing each frequency separately, as allowed by the superposition theorem.
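The circuit laws above can be combined in a short sketch of a series RLC circuit driven by a single-frequency source. This minimal Python example assumes illustrative component values and treats the 230 V source phasor as an RMS value, so that S = VI* gives average (real) power directly; it applies Ohm's law with complex impedance, checks Kirchhoff's voltage law in phasor form, and computes P and Q.

```python
import cmath
import math

f = 50.0
omega = 2 * math.pi * f
R, L, C = 8.0, 0.02, 200e-6          # illustrative component values

# Complex impedances (Python's j matches electrical engineering notation)
Z_R = complex(R, 0)
Z_L = 1j * omega * L
Z_C = 1 / (1j * omega * C)
Z = Z_R + Z_L + Z_C                  # series impedances add, like series resistors in DC

V = cmath.rect(230.0, 0.0)           # source phasor, assumed RMS: 230 V ∠ 0°
I = V / Z                            # Ohm's law with complex impedance

# Kirchhoff's voltage law in phasor form: element voltages sum to the source voltage.
V_R, V_L, V_C = I * Z_R, I * Z_L, I * Z_C
assert cmath.isclose(V_R + V_L + V_C, V)

S = V * I.conjugate()                # complex power S = V I* (RMS phasors)
P, Q = S.real, S.imag                # real power (W) and reactive power (var)
print(f"|I| = {abs(I):.2f} A, P = {P:.1f} W, Q = {Q:.1f} var, |S| = {abs(S):.1f} VA")
```

Since S = VI* = |I|²Z, only the resistor contributes to P, while Q is negative here because the capacitive reactance outweighs the inductive one at 50 Hz.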

Power engineering

In analysis of three-phase AC power systems, usually a set of phasors is defined as the three complex cube roots of unity, graphically represented as unit magnitudes at angles of 0, 120, and 240 degrees. By treating polyphase AC circuit quantities as phasors, balanced circuits can be simplified and unbalanced circuits can be treated as an algebraic combination of symmetrical circuits. This approach greatly simplifies the work required in electrical calculations of voltage drop, power flow, and short-circuit currents. In the context of power systems analysis, the phase angle is often given in degrees, and the magnitude in RMS value rather than the peak amplitude of the sinusoid.
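Treating an unbalanced set as a combination of symmetrical ones is commonly done with the symmetrical-components (Fortescue) transform, which the paragraph alludes to but does not spell out; the sketch below uses that standard transform and assumes the abc phase sequence and illustrative per-unit values. The operator a is the cube root of unity at 120°. A balanced set should yield only a positive-sequence component, and an unbalanced set should be rebuilt exactly from its three sequence components.

```python
import cmath
import math

a = cmath.rect(1.0, 2 * math.pi / 3)   # a = 1∠120°, a complex cube root of unity

def symmetrical_components(va: complex, vb: complex, vc: complex):
    """Fortescue transform: zero-, positive- and negative-sequence phasors (abc sequence)."""
    v0 = (va + vb + vc) / 3
    v1 = (va + a * vb + a**2 * vc) / 3
    v2 = (va + a**2 * vb + a * vc) / 3
    return v0, v1, v2

def phase_voltages(v0: complex, v1: complex, v2: complex):
    """Inverse transform: rebuild the three phase phasors from the sequence components."""
    return (v0 + v1 + v2,
            v0 + a**2 * v1 + a * v2,
            v0 + a * v1 + a**2 * v2)

# Balanced set at 0°, -120°, +120°: only the positive-sequence component survives.
balanced = (1.0 + 0j, a**2, a)
print([round(abs(x), 12) for x in symmetrical_components(*balanced)])   # [0.0, 1.0, 0.0]

# Unbalanced set (illustrative per-unit values): decompose, then rebuild.
unbalanced = (cmath.rect(1.0, 0.0),
              cmath.rect(0.8, math.radians(-125)),
              cmath.rect(1.1, math.radians(118)))
seq = symmetrical_components(*unbalanced)
rebuilt = phase_voltages(*seq)
```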

The technique of synchrophasors uses digital instruments to measure the phasors representing transmission system voltages at widespread points in a transmission network. Small changes in the phasors are sensitive indicators of power flow and system stability.

Footnotes

- ^ i is the imaginary unit ($i^2 = -1$). In electrical engineering texts, the imaginary unit is often symbolized by j. The frequency of the wave, in Hz, is given by $\omega / 2\pi$.

- ^ This results from $\frac{d}{dt} e^{i\omega t} = i\omega\, e^{i\omega t}$, which means that the complex exponential is an eigenfunction of the derivative operation.

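- ^ Proof:

$$\frac{d\,\operatorname{Re}\{V_c e^{i\omega t}\}}{dt} + \frac{1}{RC}\operatorname{Re}\{V_c e^{i\omega t}\} = \frac{1}{RC}\operatorname{Re}\{V_s e^{i\omega t}\} \qquad \text{(Eq.1)}$$

Since this must hold for all $t$, specifically for $t - \frac{\pi}{2\omega}$, it follows (because $\operatorname{Re}\{z\,e^{-i\pi/2}\} = \operatorname{Im}\{z\}$) that:

$$\frac{d\,\operatorname{Im}\{V_c e^{i\omega t}\}}{dt} + \frac{1}{RC}\operatorname{Im}\{V_c e^{i\omega t}\} = \frac{1}{RC}\operatorname{Im}\{V_s e^{i\omega t}\} \qquad \text{(Eq.2)}$$

It is also readily seen that:

$$\frac{d\,\operatorname{Re}\{V_c e^{i\omega t}\}}{dt} = \operatorname{Re}\left\{\frac{d\,(V_c e^{i\omega t})}{dt}\right\} = \operatorname{Re}\{i\omega V_c e^{i\omega t}\}$$

$$\frac{d\,\operatorname{Im}\{V_c e^{i\omega t}\}}{dt} = \operatorname{Im}\left\{\frac{d\,(V_c e^{i\omega t})}{dt}\right\} = \operatorname{Im}\{i\omega V_c e^{i\omega t}\}$$

Substituting these into Eq.1 and Eq.2, multiplying Eq.2 by $i$, and adding both equations gives:

$$i\omega V_c e^{i\omega t} + \frac{1}{RC} V_c e^{i\omega t} = \frac{1}{RC} V_s e^{i\omega t}$$

$$\left(i\omega V_c + \frac{1}{RC} V_c\right) e^{i\omega t} = \left(\frac{1}{RC} V_s\right) e^{i\omega t}$$

$$i\omega V_c + \frac{1}{RC} V_c = \frac{1}{RC} V_s \qquad \text{(QED)}$$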